Problem

The deep web refers to the part of the internet that is not indexed or easily accessible through traditional search engines. It includes websites and content that are not visible to the general public. While the deep web itself is not inherently malicious, it can provide an environment where cyber attacks are organized and executed.

On the deep web, attackers use the anonymity it offers to plan and orchestrate various types of attacks. Their activities include selling stolen data, trading illegal goods and services, distributing malware, coordinating hacking campaigns, and exchanging hacking tools and techniques. Communication channels, forums, and marketplaces within the deep web serve as meeting points where cybercriminals share information and collaborate on malicious activities.

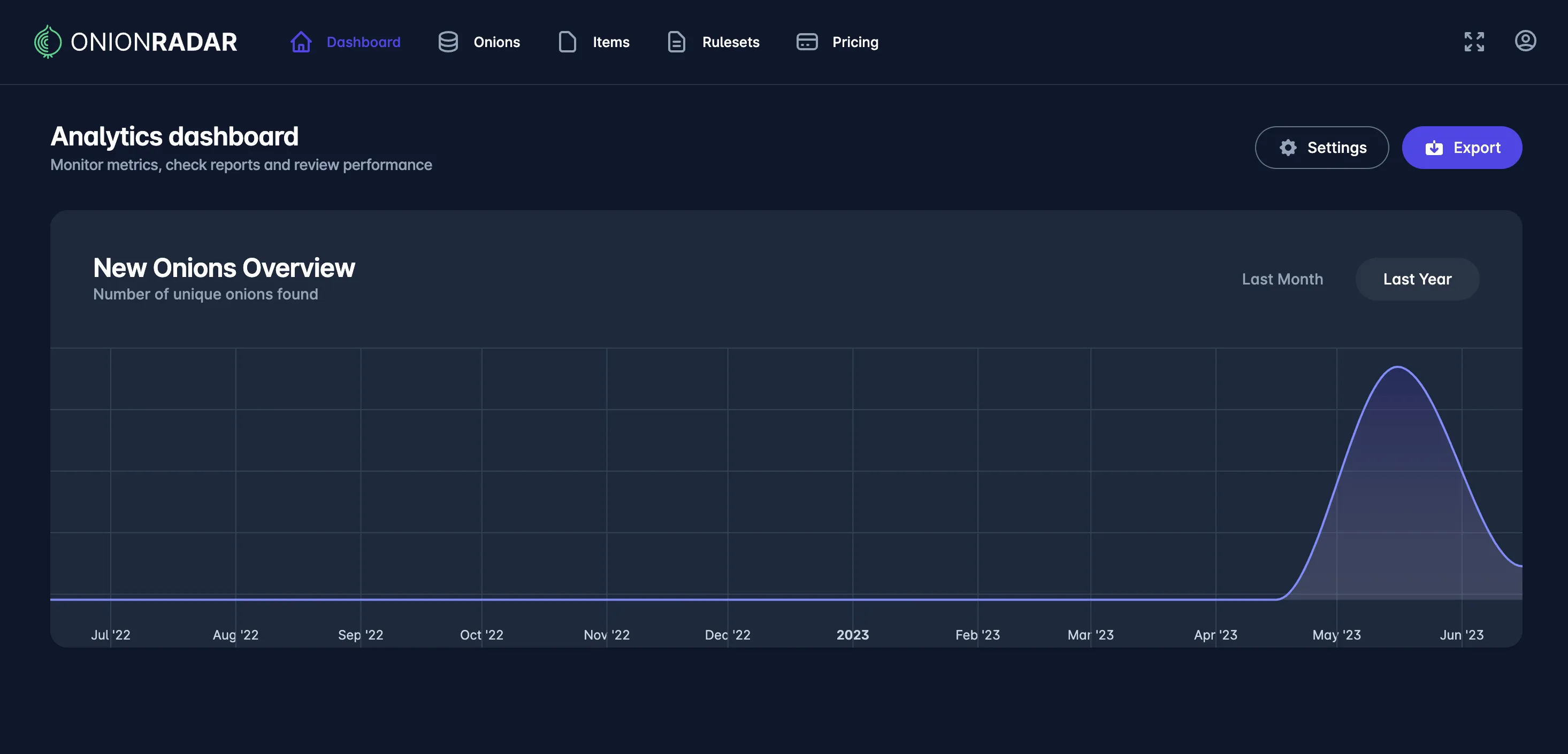

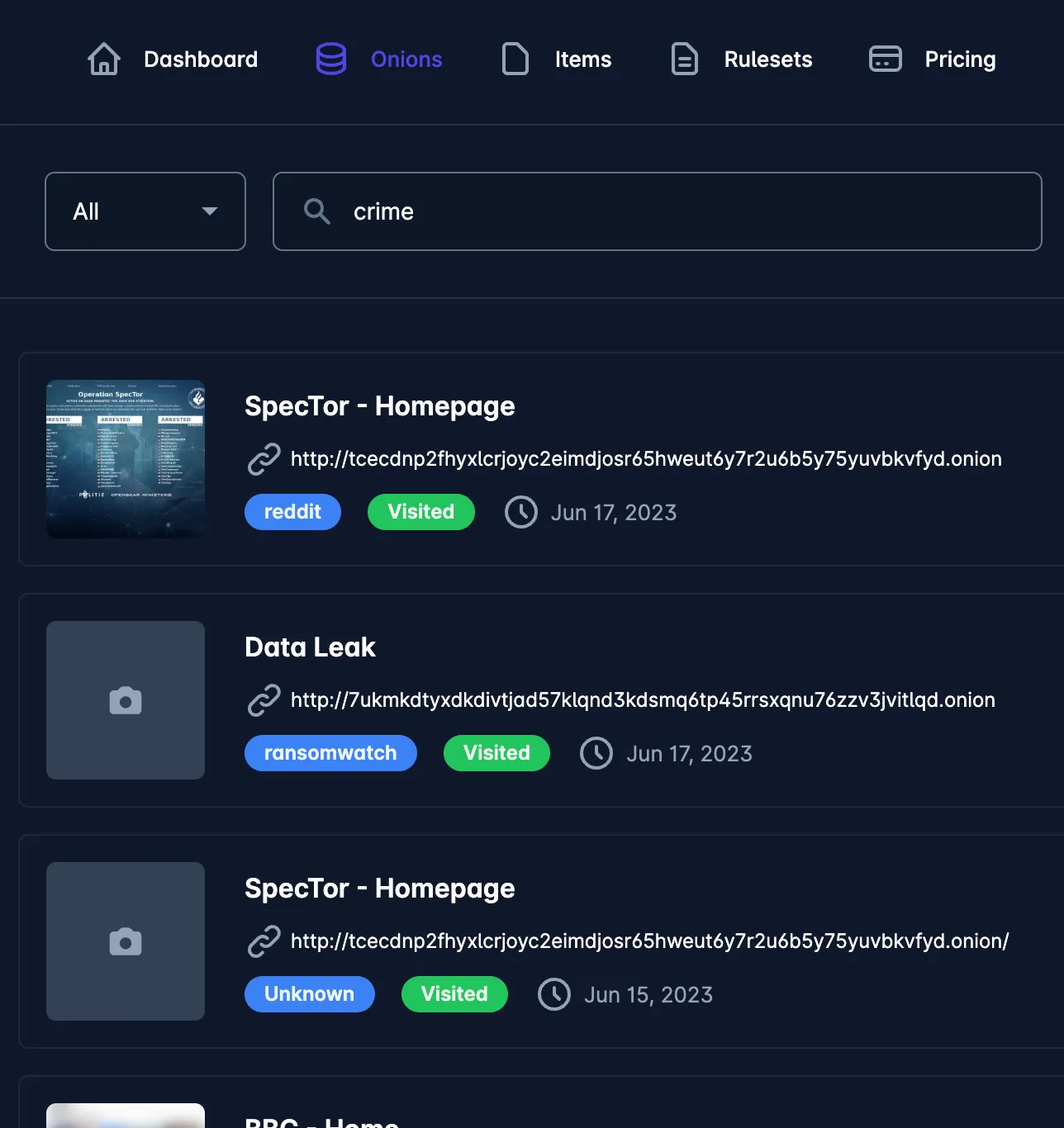

Onion Radar is a crawling system for the deep web that allows you to index the hidden web. It facilitates the search for and detection of potential information breaches or organized attacks that may affect your organization.

Features

- Monitor hidden services and index their contents and information.

- Perform full-text search on indexed hidden content.

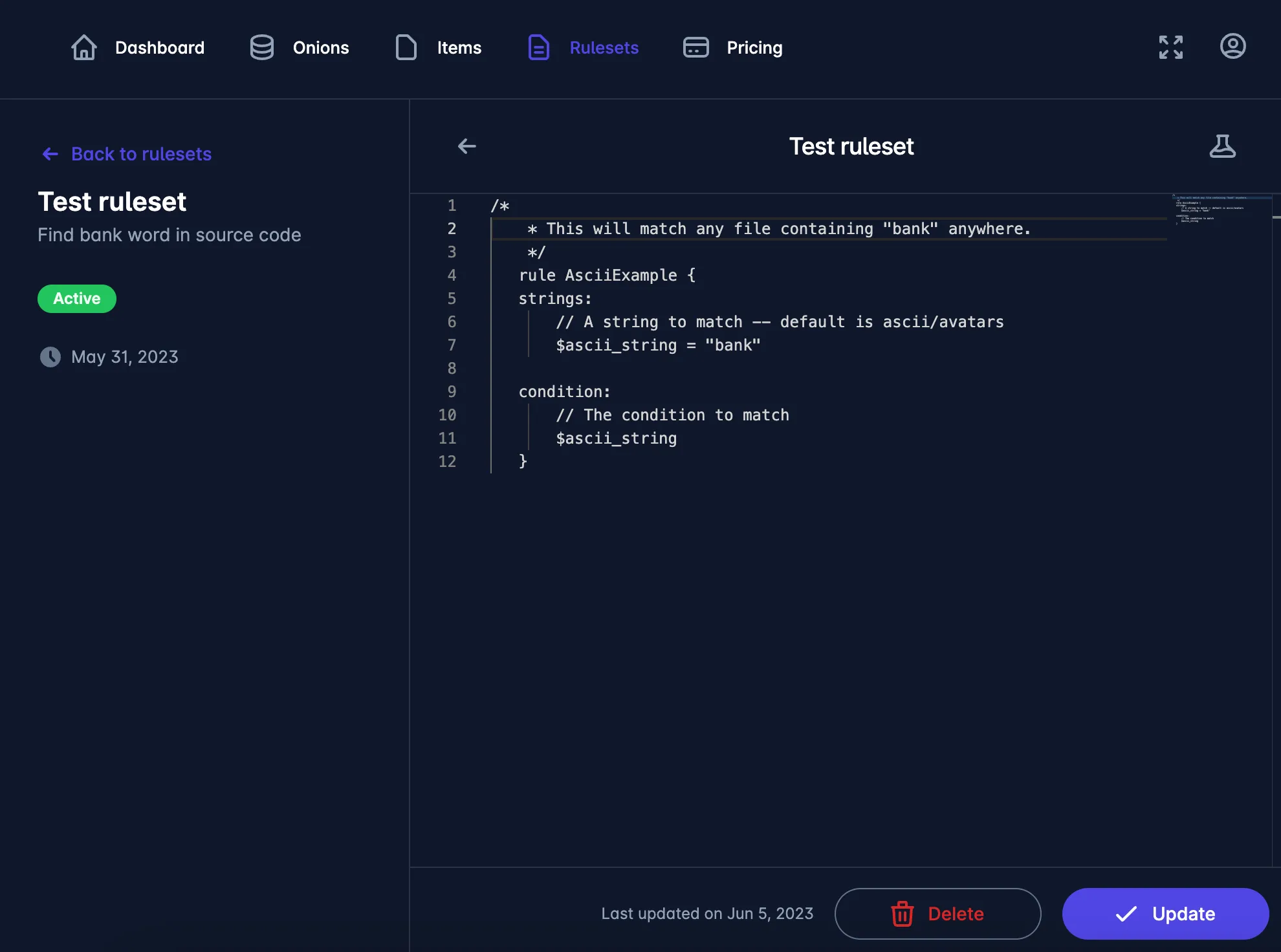

- Write custom YARA rules to match potential threats related to your brand.

- Receive notifications when your content matches potential threats.

Implemented spiders

New spiders are easy to implement using the Scrapy API. The following spiders are currently implemented as sources for retrieving “.onion” links:

- Pastebin

- Underdir

- Yellowdir

- Ransomwatch

- Dark.fail

- Tor.taxi

- Ahmia search engine

- GitHub

- Generic website spider (create new spiders from the admin dashboard)
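Whatever the source, each spider has to pull “.onion” links out of the text it fetches. The following is a minimal standard-library sketch of that step, not Onion Radar's actual code: the function name `extract_onion_hosts` is hypothetical, and the pattern assumes only current v3 onion addresses (56 base32 characters) are of interest. It checks the shape of an address, not its checksum.

```python
import re

# A Tor v3 onion address is 56 base32 characters (a-z, 2-7) followed by ".onion".
# The lookbehind stops the pattern from matching the tail of a longer base32 run.
ONION_RE = re.compile(r"(?<![a-z2-7])([a-z2-7]{56})\.onion\b", re.IGNORECASE)


def extract_onion_hosts(text: str) -> list[str]:
    """Return the unique v3 .onion hostnames in `text`, in first-seen order.

    Hypothetical helper for illustration; hostnames are lowercased so that
    the same service listed with different casing is only reported once.
    """
    hosts = (m.group(1).lower() + ".onion" for m in ONION_RE.finditer(text))
    # dict.fromkeys removes duplicates while preserving insertion order.
    return list(dict.fromkeys(hosts))
```

In a Scrapy spider, a helper like this could be called on `response.text` inside the `parse` callback, with each returned hostname yielded as an item for indexing.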

Technologies

- Django

- Scrapy

- Angular 8

- Celery + Celery Beat for scheduled tasks

- Redis

- PostgreSQL